How CXL Technology Solves Memory Problems in Data Centres (Part 1)

Published: 2024-12-18 15:12:02 | Source: Internet

Compute Express Link (CXL) is a device interconnect standard that has become an important development in the memory and storage field, particularly for addressing memory bottlenecks. CXL not only expands memory capacity and bandwidth but also enables heterogeneous interconnection and the disaggregation of resource pools within data centres. By interconnecting diverse compute and storage resources, CXL mitigates memory-related challenges in data centres and improves system performance and efficiency.

The Emergence of CXL Technology

The rapid advancement of applications such as cloud computing, big data analytics, artificial intelligence, and machine learning has sharply increased the demand for data storage and processing within data centres. Traditional DDR memory interfaces are limited in total bandwidth, in average bandwidth per core, and in capacity scalability. These limits are particularly critical in data centre environments, which face numerous memory-related constraints. Consequently, new memory interface technologies such as CXL have emerged to address them.

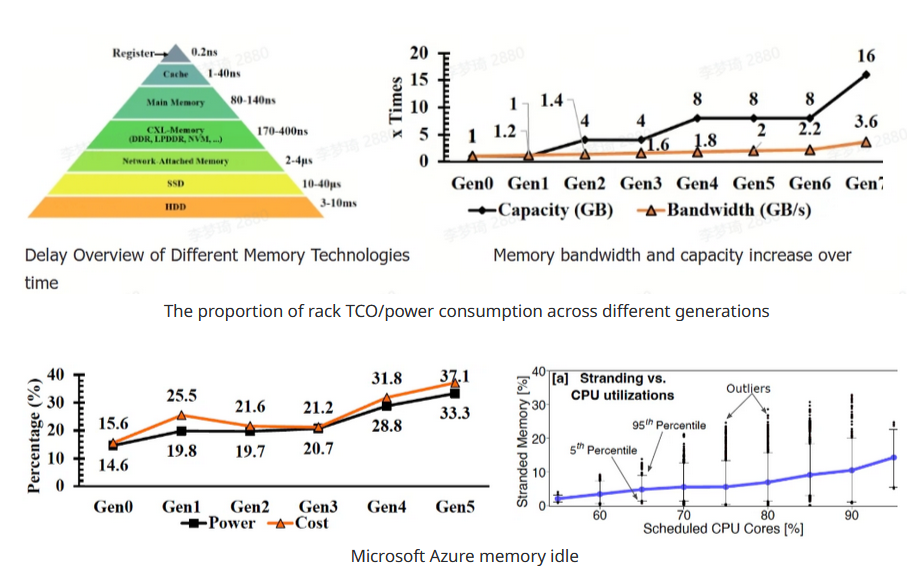

In data centres, the CPU and memory remain tightly coupled, with each CPU generation adopting new memory technologies to attain higher capacity and bandwidth. Since 2012, the number of CPU cores has increased rapidly; however, the memory bandwidth and capacity available to each core have not kept pace and have in fact decreased. This trend is expected to persist, with memory capacity advancing at a faster rate than memory bandwidth, which could significantly affect overall system performance.
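The per-core squeeze described above can be made concrete with a little arithmetic. The sketch below computes theoretical peak DRAM bandwidth from the channel count and transfer rate, then divides by the core count. The two server configurations are hypothetical examples chosen for illustration, not figures from this article, and the 8 bytes per transfer assumes a 64-bit data path per channel.

```python
def peak_bandwidth_gbs(channels: int, mt_per_s: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s.

    Assumes a 64-bit (8-byte) data path per channel.
    """
    return channels * mt_per_s * 1e6 * bytes_per_transfer / 1e9


def bandwidth_per_core_gbs(channels: int, mt_per_s: int, cores: int) -> float:
    """Peak bandwidth divided evenly across all cores, in GB/s."""
    return peak_bandwidth_gbs(channels, mt_per_s) / cores


# Hypothetical configurations, for illustration only.
older = bandwidth_per_core_gbs(channels=8, mt_per_s=3200, cores=64)
newer = bandwidth_per_core_gbs(channels=12, mt_per_s=4800, cores=192)

print(f"older config: {older:.2f} GB/s per core")
print(f"newer config: {newer:.2f} GB/s per core")
```

In this illustration the total bandwidth more than doubles, yet the share available to each core shrinks because the core count triples.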

Furthermore, there is a substantial latency and cost gap between DRAM and SSD, and expensive memory resources are often underutilized. A mismatch between compute and memory ratios easily leaves memory idle, producing stranded memory that cannot be used effectively. The data centre sector, one of the most capital-intensive industries in the world, suffers from low utilization rates, which is a considerable financial burden. According to Microsoft, 50% of total server cost is attributable to DRAM. Despite this expense, an estimated 25% of DRAM remains unused. Internal statistics from Meta show similar trends. Notably, memory accounts for a rising share of total system cost, shifting the primary cost burden from the CPU to memory. Using CXL to build a memory resource pool may provide an effective solution by allocating memory dynamically and optimizing the compute-to-memory ratio for total cost of ownership (TCO).
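A toy model can show why pooling reduces stranded memory. In the sketch below, static provisioning sizes every server for the largest demand, while pooled provisioning gives each server a smaller local allocation and serves the overflow from a shared pool. The demand values, the 128 GiB local size, and the function names are all hypothetical; the model also ignores pool latency, failure domains, and the fact that peaks rarely coincide.

```python
def stranded_fraction(installed_gib: float, demands_gib: list[float]) -> float:
    """Fraction of installed DRAM that no workload is using."""
    return (installed_gib - sum(demands_gib)) / installed_gib


def static_total_gib(demands_gib: list[float]) -> float:
    """Every server is sized for the largest single demand."""
    return max(demands_gib) * len(demands_gib)


def pooled_total_gib(local_gib: float, demands_gib: list[float]) -> float:
    """Each server gets local_gib; demand above that comes from a shared pool."""
    pool = sum(max(0, d - local_gib) for d in demands_gib)
    return local_gib * len(demands_gib) + pool


# Hypothetical per-server memory demands in GiB.
demands = [96, 128, 256, 160, 64, 192, 112, 144]

static_gib = static_total_gib(demands)
pooled_gib = pooled_total_gib(128, demands)

print(f"static: {static_gib} GiB, stranded {stranded_fraction(static_gib, demands):.1%}")
print(f"pooled: {pooled_gib} GiB, stranded {stranded_fraction(pooled_gib, demands):.1%}")
```

Even this conservative pool, sized for all overflows at once, needs far less installed DRAM than worst-case static sizing for the same set of demands.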

The industry has been actively pursuing the adoption of new memory interface technologies and system architectures in response to traditional memory limitations.

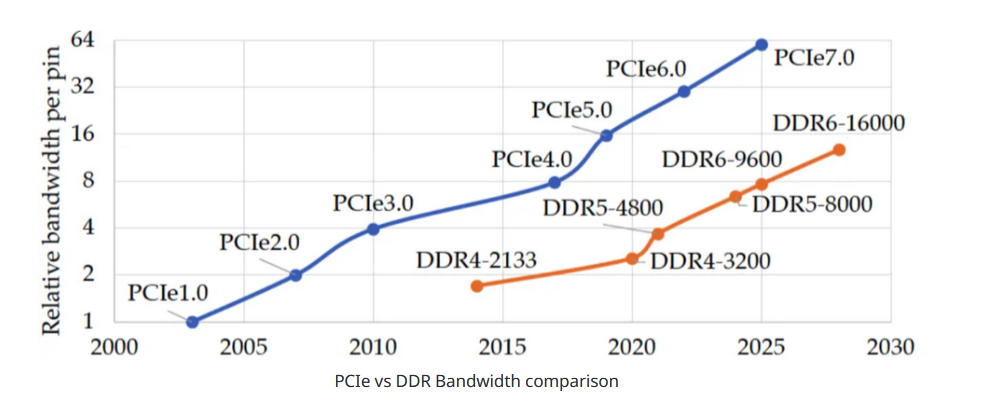

Among interconnect technologies, PCI Express (PCIe, Peripheral Component Interconnect Express) has become the predominant choice. As a serial bus, PCIe has drawbacks, including relatively high communication overhead between devices from both a performance and a software perspective. There is encouraging news, however: the PCIe 6.0 specification, finalized in early 2022, raises the per-lane rate to 64 GT/s, which gives up to 256 GB/s across a x16 link when both directions are counted. PCIe 4.0, with a data rate of 16 GT/s, is not yet universally adopted, but newer generations are expected to roll out over the coming years. The main driver of PCIe development is the escalating demand from cloud computing. Historically, PCIe data rates have doubled every three to four years, a consistent trajectory of improvement.
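The relationship between a per-lane rate in GT/s and a link bandwidth in GB/s is simple arithmetic, sketched below. The function name and parameters are illustrative. The 128b/130b efficiency applies to 16 GT/s links; for 64 GT/s the sketch uses the raw rate and ignores FLIT, FEC, and protocol overhead, so real throughput is lower.

```python
def link_bandwidth_gbs(gt_per_s: float, lanes: int = 16,
                       encoding_efficiency: float = 1.0) -> float:
    """Per-direction link bandwidth in GB/s.

    Each transfer carries one bit per lane, so divide by 8 for bytes.
    """
    return gt_per_s * lanes * encoding_efficiency / 8


# 16 GT/s with 128b/130b line encoding, x16 link.
gen4_per_direction = link_bandwidth_gbs(16, encoding_efficiency=128 / 130)

# 64 GT/s, raw rate (overheads ignored), x16 link.
raw64_per_direction = link_bandwidth_gbs(64)
raw64_both_directions = 2 * raw64_per_direction

print(f"16 GT/s x16: {gen4_per_direction:.1f} GB/s per direction")
print(f"64 GT/s x16: {raw64_per_direction:.0f} GB/s per direction, "
      f"{raw64_both_directions:.0f} GB/s both directions")
```

A 256 GB/s headline figure therefore corresponds to a x16 link at 64 GT/s with both directions added together.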

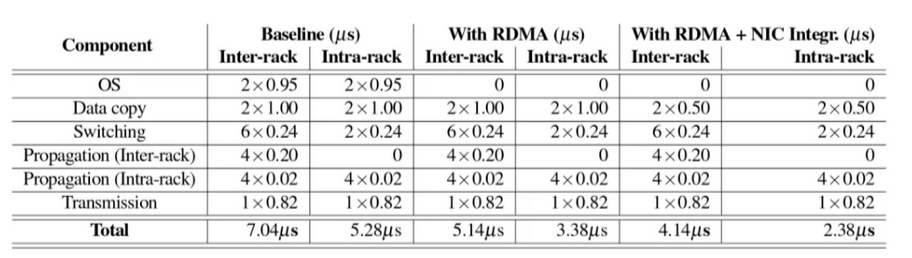

System architecture has evolved through multiple generations. Initially, the focus was on sharing a resource pool among multiple servers, typically using RDMA (Remote Direct Memory Access) over standard Ethernet or InfiniBand networks. This approach incurs higher communication latency: local memory access takes several tens of nanoseconds, whereas RDMA typically takes a few microseconds. It also offers lower bandwidth and lacks essential features such as memory coherence.

On networks with 40 Gbps links, the achievable round-trip delay is the sum of several components, each of which adds latency.

Moving to 100 Gbps links reduces the data transmission delay by approximately 0.5 microseconds.
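One way to arrive at a saving of roughly this size is to compare serialization delay, the time needed to put a payload onto the wire, at the two link rates. The sketch below assumes a 4 KiB payload (one memory page); the article does not state the transfer size, so that value is an assumption, and the function name is illustrative. Propagation, switching, and software delays are not modelled.

```python
def serialization_delay_us(payload_bytes: int, link_gbps: float) -> float:
    """Time in microseconds to transmit payload_bytes on a link of link_gbps."""
    bits = payload_bytes * 8
    return bits / (link_gbps * 1e9) * 1e6


PAGE_BYTES = 4096  # assumed payload: one 4 KiB page

delay_40g = serialization_delay_us(PAGE_BYTES, 40)
delay_100g = serialization_delay_us(PAGE_BYTES, 100)
saving = delay_40g - delay_100g

print(f"40 Gbps:  {delay_40g:.3f} us")
print(f"100 Gbps: {delay_100g:.3f} us")
print(f"saving:   {saving:.3f} us")
```

Under this assumption the saving comes to about 0.49 microseconds, still small next to the few microseconds of a typical RDMA round trip.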

In 2016, CCIX emerged as a potential industry standard, driven by the need for interconnects faster than existing technologies could provide and by the requirement for cache coherence to speed up memory access in heterogeneous multiprocessor systems. Although it builds on the PCI Express standard, CCIX has not gained significant traction, primarily because it lacked support from pivotal industry stakeholders.

By contrast, CXL builds on the established PCIe 5.0 physical and electrical layer standards and ecosystem, adding cache coherence and low-latency memory load/store transactions. As an industry-standard protocol endorsed by many major players, CXL has become pivotal for advancing heterogeneous computing and enjoys robust industry support. Notably, AMD's Genoa processors (launched in late 2022) and Intel's Sapphire Rapids processors (launched in early 2023) support CXL 1.1. Consequently, CXL has emerged as one of the most promising technologies in both industry and academia for addressing the memory bottleneck.

CXL operates on the PCIe physical layer, leveraging existing PCIe physical and electrical interface features to deliver high bandwidth and scalability. CXL also offers lower latency than conventional PCIe interconnects, along with features that let CPUs interact with peripheral devices, such as memory expanders and accelerators with their attached memory, within a cache-coherent framework using load/store semantics. This keeps the CPU memory space and the additional device memory coherent, enabling resource sharing that improves performance while reducing software stack complexity. Memory expansion is one of the primary target scenarios for CXL.
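The difference between load/store semantics and explicit I/O calls can be sketched in software. The example below is only an analogy: a temporary file stands in for device memory, `os.pwrite`/`os.pread` represent the explicit I/O path, and an `mmap` region represents memory that is addressed directly. It does not touch any CXL hardware, the function name is hypothetical, and the `os.pread`/`os.pwrite` calls require a Unix-like system.

```python
import mmap
import os
import struct
import tempfile


def io_versus_mapped(size: int = 4096) -> tuple[int, int]:
    """Write and read one 64-bit value via explicit I/O and via a mapping."""
    fd, path = tempfile.mkstemp()
    try:
        os.ftruncate(fd, size)  # give the stand-in "device" a fixed size

        # Explicit I/O path: every access is a system call with a buffer copy.
        os.pwrite(fd, struct.pack("<Q", 1), 0)
        via_io = struct.unpack("<Q", os.pread(fd, 8, 0))[0]

        # Mapped path: the region is addressed like ordinary memory.
        with mmap.mmap(fd, size) as region:
            region[8:16] = struct.pack("<Q", 2)               # "store"
            via_mapping = struct.unpack("<Q", region[8:16])[0]  # "load"

        return via_io, via_mapping
    finally:
        os.close(fd)
        os.unlink(path)


print(io_versus_mapped())
```

With a CXL memory expander the application typically does not need even the mapping step, because the added capacity appears to the operating system as ordinary system memory.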

[Figure: Flex Bus x16 connector/slot]

For more information, see: https://www.ruijienetworks.com/support/tech-gallery/how-cxl-technology-solves-memory-problems-in-data-centres-part1
